Browser-based botnets are the T-1000s of the DDoS world. Just like the iconic villain of the old Judgment Day movie, they too are designed for adaptive infiltration. This is what makes them so dangerous. Where other, more primitive bots would try to brute-force your defenses, these bots can simply mimic their way through the front gate. By the time you notice that something's wrong, your perimeter has already been breached, your servers have been brought down, and there is little left to do but to hang up and move on.

So how do you flush out a T-1000? How do you tell a browser-based bot from a real person using a real browser? Some common bot filtering methods, which usually rely on sets of Progressive Challenges, are absolutely useless against bots that can retain cookies and execute JavaScript.

The alternative, indiscriminately flashing CAPTCHAs at anyone with a browser, is nothing less than a self-inflicted disaster, especially when the attacks can go on for weeks at a time.

To demonstrate how these attacks can be stopped, here's a case study of an actual DDoS event involving such browsers: an attack that employed a swarm of human-like bots and that would, under most circumstances, have resulted in a complete and total disaster.

Browser-based Botnet: Attack Methodology

The attack was executed by an unidentified botnet, which employed browser-based bots that were able to retain cookies and execute JavaScript. Early in the attack they were identified as PhantomJS headless browsers.

PhantomJS is a development tool that uses a bare-bones (or "headless") browser, providing its users with full browsing capabilities but no user interface: no buttons, no address bar, etc. PhantomJS can be used for automation and load monitoring.

The attack lasted for over 150 hours, during which we recorded malicious visits from over 180,000 attacking IPs worldwide. In terms of volume, the attack peaked at 6,000 hits/second, for an average of more than 690,000,000 hits a day. The number of attacking IPs, as well as their geographical variety, led us to believe that this might have been a coordinated effort involving more than one botnet at a time.

Throughout the attack we dealt with 861 different user-agent variants, as the attackers constantly modified the header structure to try to evade our defenses. Most commonly, the attackers used different variants of Chrome, Opera and Firefox user-agents.

It is interesting to note that, besides using human-like bots, the attackers also made an effort to mimic human behavior, presumably to avoid behavior-based security rules. To that end, the attackers leveraged the number of available IP addresses to split the load in a way that would not trigger rate-limiting. At the same time, by constantly introducing new IPs, the attackers made sure that IP-based restrictions would be just as ineffective. The bots were also programmed for human-like browsing patterns: accessing the sites from different landing pages and moving through them at a random pace and in varied patterns, before converging on the target resource.
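
To see why splitting the load defeats per-IP rate limiting, here is a rough back-of-the-envelope sketch using the figures above. It assumes, as a simplification, that all 180,000 IPs were active at the same moment (in reality new IPs were introduced over time), and the per-IP threshold is a hypothetical value chosen only for illustration.

```python
# Figures taken from the attack description above.
PEAK_HITS_PER_SECOND = 6_000
ATTACKING_IPS = 180_000  # simplification: treated as all active at once

# Hypothetical per-IP threshold, not an actual product setting.
PER_IP_LIMIT_PER_MINUTE = 60


def per_ip_hits_per_minute(total_hits_per_second: float, active_ips: int) -> float:
    """Average request rate a single IP contributes when load is split evenly."""
    return total_hits_per_second * 60 / active_ips


rate = per_ip_hits_per_minute(PEAK_HITS_PER_SECOND, ATTACKING_IPS)
triggers_rate_limit = rate > PER_IP_LIMIT_PER_MINUTE

print(f"{rate:.1f} hits/minute per IP, rate limit triggered: {triggers_rate_limit}")
```

Under these assumptions each IP sends only a couple of requests per minute, which is well within what a normal human visitor might generate.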

Methods of Mitigation

Incapsula’s Layer 7 security perimeter uses a combination of filtering methods, which create several defensive layers around the protected website or web application.

In this case the nature of the attacking bots allowed them to successfully bypass Progressive Challenges. As mentioned, the botnet's shepherds also went to great lengths to evade our Abnormality Detection mechanisms, which they were able to do, at least to some extent.

However, by using a known headless browser, the attackers left themselves open to detection by our Client Classification mechanism, which, interestingly enough, uses the same technology as our free plan 'Bot Filtering' feature.

Our Client Classification algorithms rely on a crowd-sourced pool of known signatures, consisting of information gathered from across our network. At the time of the attack, the signature pool held over 10,000,000 signature variants, each containing information about:

- User-agent

- IPs and ASN info

- HTTP Headers

- JavaScript footprint

- Cookie/Protocol support variations
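
As a rough illustration of what such a signature record might look like, here is a minimal sketch. The actual data model and matching algorithms are not described in this post, so every class, field name, header order and matching rule below is an assumption made for the example. The idea it shows is that a spoofed user-agent does not help if the harder-to-fake attributes still match a known client.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class ClientSignature:
    """Hypothetical signature record; fields loosely mirror the list above."""
    client_name: str          # label assigned by the signature pool
    header_order: tuple       # order in which the client sends HTTP headers
    js_footprint: frozenset   # JavaScript properties the client exposes
    supports_cookies: bool


@dataclass(frozen=True)
class Observation:
    """Hypothetical view of a single visitor, as seen by the filter."""
    user_agent: str           # easily spoofed, so not trusted on its own
    asn: int
    header_order: tuple
    js_footprint: frozenset
    supports_cookies: bool


def classify(obs: Observation, pool: Iterable[ClientSignature]) -> Optional[str]:
    """Return the name of the first signature whose traits all match, else None."""
    for sig in pool:
        if (obs.header_order == sig.header_order
                and sig.js_footprint <= obs.js_footprint  # subset check
                and obs.supports_cookies == sig.supports_cookies):
            return sig.client_name
    return None


# Illustrative values only: the header order is made up for the example.
pool = [ClientSignature(
    client_name="PhantomJS",
    header_order=("User-Agent", "Accept", "Connection", "Accept-Encoding", "Host"),
    js_footprint=frozenset({"callPhantom", "_phantom"}),
    supports_cookies=True,
)]

visitor = Observation(
    user_agent="Mozilla/5.0 (Windows NT 6.1) ... Chrome/30.0 Safari/537.36",
    asn=64500,  # ASN from the range reserved for documentation
    header_order=("User-Agent", "Accept", "Connection", "Accept-Encoding", "Host"),
    js_footprint=frozenset({"callPhantom", "_phantom", "navigator"}),
    supports_cookies=True,
)

print(classify(visitor, pool))
```

In this sketch the visitor claims to be Chrome, yet it is still labeled by the signature it actually matches.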

In the context of browser-based visitors, this means that we are looking not only at the more apparent factors (like user-agent or their correlation to origin IPs), but also at the intricate nuances that exist within each browser.

Security is a closed hand game, so it would be hard to explain this without exposing some of our methods. Still, to provide some context, we can say that (on the low end) this means looking at minor differences in the way browsers handle encoding, respond to specific attributes, etc. For example, we can learn about our visitors from the way their browser handles HTTP headers with double spacing or special characters.

The point is, our database holds tens of thousands of variants for each known browser or bot, to cover all possible scenarios (e.g., browsing using different desktop or handheld devices, going through proxies, etc.). Best of all, in this case, the attackers' weapon of choice, the WebKit-based PhantomJS, is covered by those signatures.

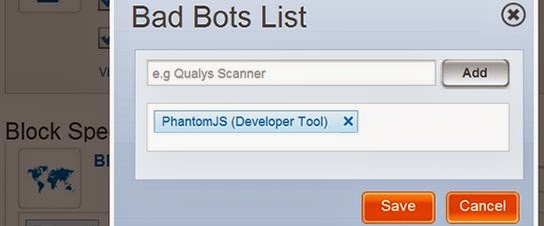

And so, while the attackers were ducking and diving to make their bots look like humans, all our team really had to do was to let our system discover the type of headless browser they were using. From there it was a simple task of blocking all PhantomJS instances. We even left a redemption option, offering visitors the chance to fill out a CAPTCHA, just in case any of them were real human visitors.
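
The blocking rule with a redemption option described above can be sketched as a small decision function. This is only an illustration of the policy as described in this post, not actual product logic; the `Action` enum and the `decide` function are hypothetical names.

```python
from enum import Enum
from typing import Optional


class Action(Enum):
    ALLOW = "allow"
    CHALLENGE = "captcha"
    BLOCK = "block"


def decide(client_name: Optional[str], captcha_solved: Optional[bool]) -> Action:
    """Decide what to do with a visitor.

    client_name:    label from client classification (None if unclassified)
    captcha_solved: None if no CAPTCHA has been attempted yet,
                    otherwise whether the attempt succeeded
    """
    if client_name != "PhantomJS":
        return Action.ALLOW          # this rule only targets the identified tool
    if captcha_solved is None:
        return Action.CHALLENGE      # redemption option for possible humans
    return Action.ALLOW if captcha_solved else Action.BLOCK


print(decide("PhantomJS", None))
print(decide("PhantomJS", False))
```

A visitor classified as PhantomJS is first challenged, and is only let through if the CAPTCHA is actually solved.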

Not surprisingly, no such CAPTCHAs were filled.

Aftermath

The attacks continued past the point of mitigation. Days later, after we switched to auto-pilot, the attackers were still trying to come at us with new user-agents and new IPs, obviously oblivious to the real reason they were being blocked. However, for all their T-1000-like relentlessness, they were already iced. Their cover was blown and their methods, signatures and patterns were internally recorded for future reference.
